“My time at Stanford has centered around a development in image-making that I think is more significant than the invention of photography…”

Since its advent, the photographic frame has allowed us to see other people’s visions of the world. When we look at the oldest-surviving photograph—Nicéphore Niépce’s almost postcard-sized View from the Window at Le Gras—we are looking at an approximation of how Niépce saw the world from a high window in his home, looking out over his estate. While writing (especially novels) had long been able to put readers in the mind of another person, this new invention offered an unparalleled degree of exactitude.

In the intervening years, the wonder of this invention has largely faded, even becoming banal. Today, as we scroll through our social media feeds, we take for granted that many of the posts plunge us into the space behind someone else’s eyes. The experiences we consume are as varied as the images pervading the digital realm: from a friend’s lunch to a photojournalist’s frontline capture, each frame is a frozen instant taken from someone else’s perception of reality. If you pause to think about it, it’s still quite an incredible privilege. And while just about everything about photography has changed since its emergence (its means of production, dissemination and consumption), this basic fact of sharing another person’s perspective has remained largely unquestioned.

Of course, there have long been unmanned photographic methods—the century-old technique of pigeon photography comes to mind, not to mention more contemporary examples of satellite imagery, drone photography and so on—but the fact is that the photographs we most often think about and look at carry a trace of human intention; they expand our view(s) of the world by allowing us to see from another’s point of view.

This is what makes the work of Trevor Paglen so important. As a widely exhibited artist, the holder of a PhD in geography, and a very recent MacArthur Fellowship winner, Paglen has every right to challenge our view of the world. Yet even so, the quote that opens this review could seem a bit grandiose. Time spent with his work, however, reveals that his proclamations are anything but. For nearly two centuries, we have thought about photography as an enterprise undertaken by humans, for humans. But what happens when machines start to photograph? What happens when machines start to see?

***

For years, Paglen’s mission has been simple: to learn how to see the world, especially its invisible parts. He has sought out spy satellites and the vast, unseen apparatuses behind government surveillance. He has investigated the underwater cables that run through the ocean, conveying 98% of the world’s data. To paraphrase Paglen, the very Earth has been turned into a motherboard of communication networks, and his goal is to find the tools that will help him (and us) see this reality more clearly. Given the degree to which these unseen elements (surveillance, data) define how we interact with the world, this is important work.

His latest target: the invisible yet fast-developing technologies of computer vision. Paglen writes, “Over the last ten years or so, powerful algorithms and artificial intelligence networks have enabled computers to ‘see’ autonomously. What does it mean that ‘seeing’ no longer requires a human ‘seer’ in the loop?” This is the fertile ground of Paglen’s latest show at Metro Pictures in New York, titled “A Study of Invisible Images.”

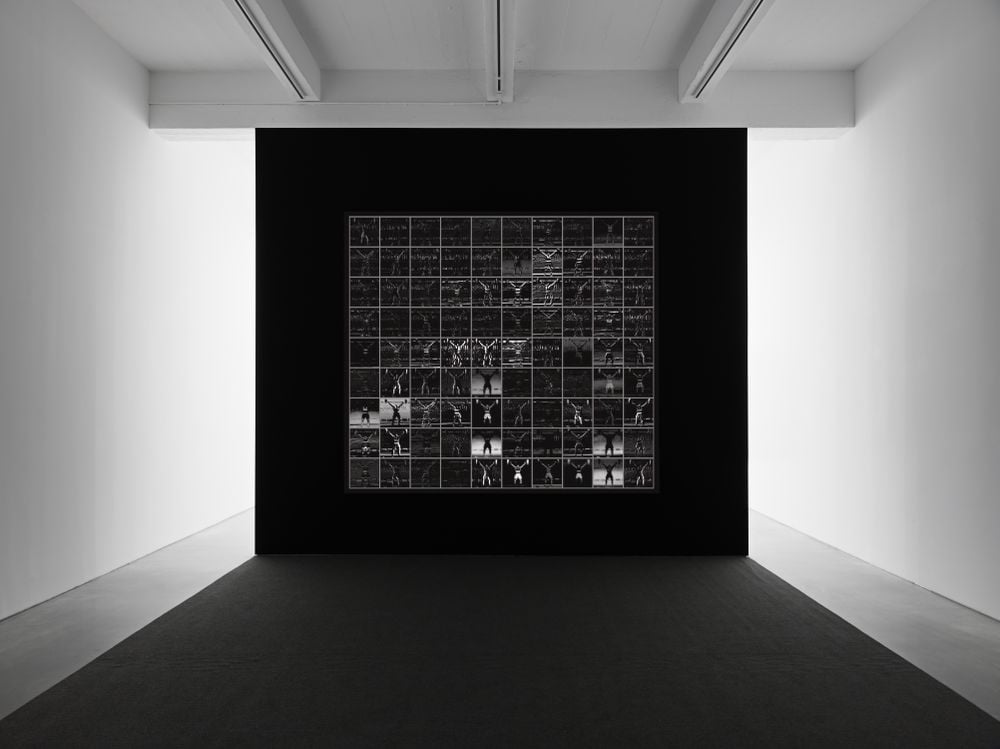

On the surface, Paglen’s investigation of computer vision appears less political than the issues of government surveillance. Yet as his work probes the construction of this increasingly dominant point of view, we begin to understand the urgent and grave implications for our day-to-day lives. These machine-made images will, more and more, define many basic elements of our lives—how we move (self-driving cars), what we consume (automated assembly-lines and fulfillment centers), and even how we interact with one another (facial recognition as a means of social control). And disturbingly, these images will be produced and looked at only by other machines. We have seen the unsettling images of human drone operators turning contemporary battlefields into video game-like digital visualizations—but how will machines see the world and us human beings within it?

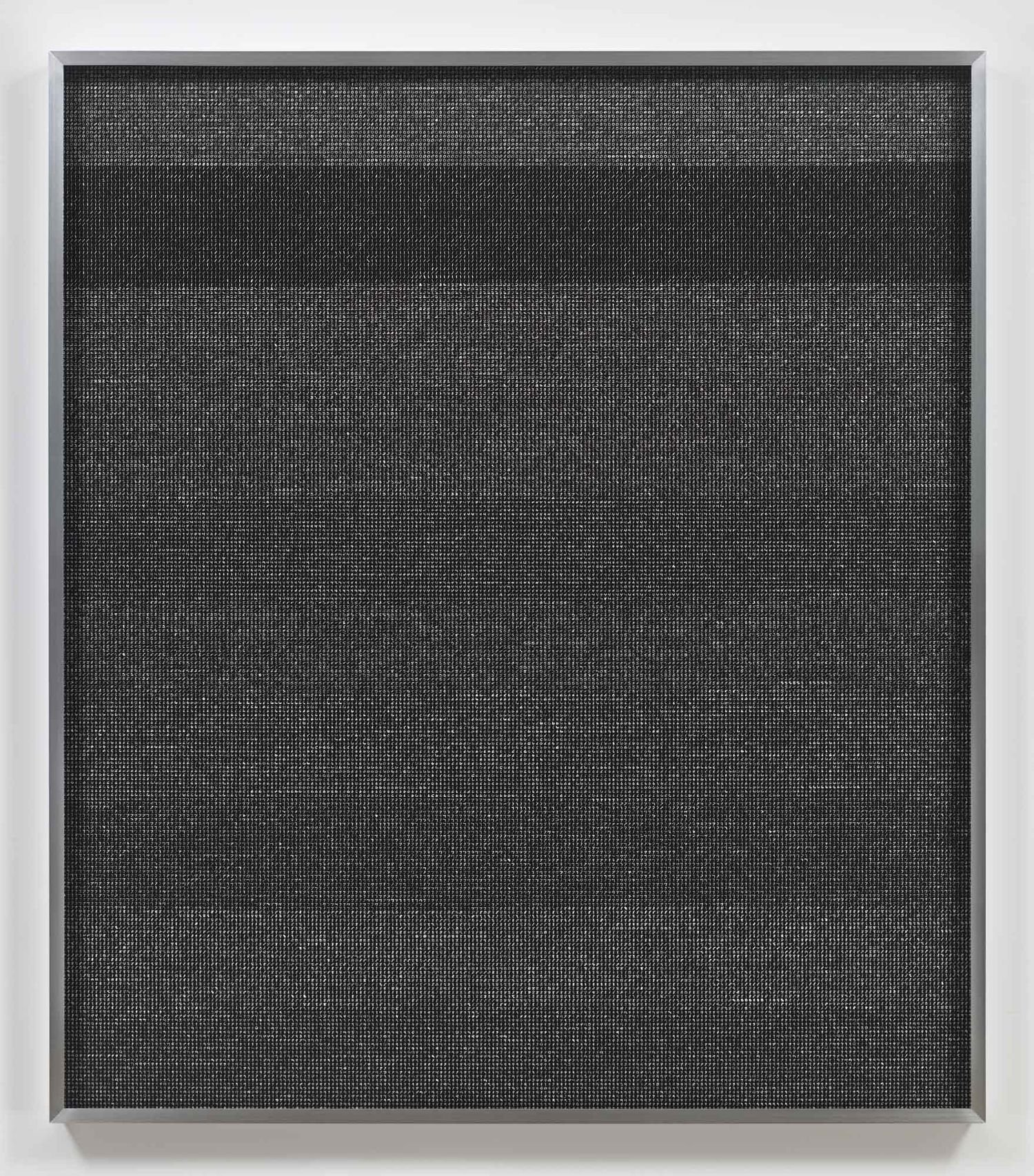

As Paglen writes, “Something dramatic has happened to the world of images: they have become detached from human eyes. Our machines have learned to see [w]ithout us…I call this world of machine-[to]-machine image-making ‘invisible images,’ because it’s a form of vision that’s inherently inaccessible to human eyes. This exhibition is a study of various kinds of these invisible images.”

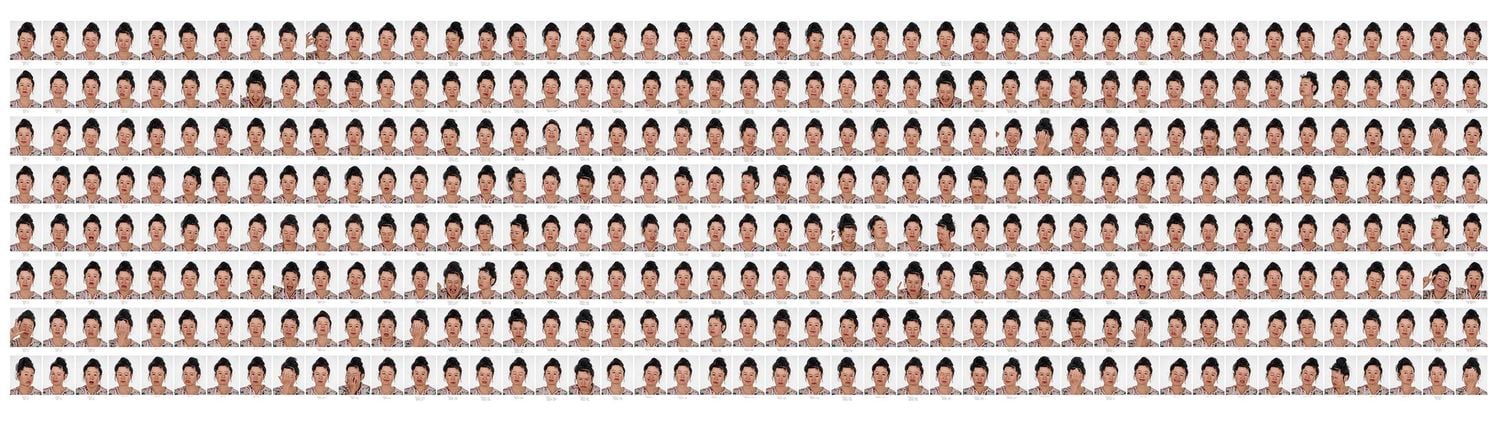

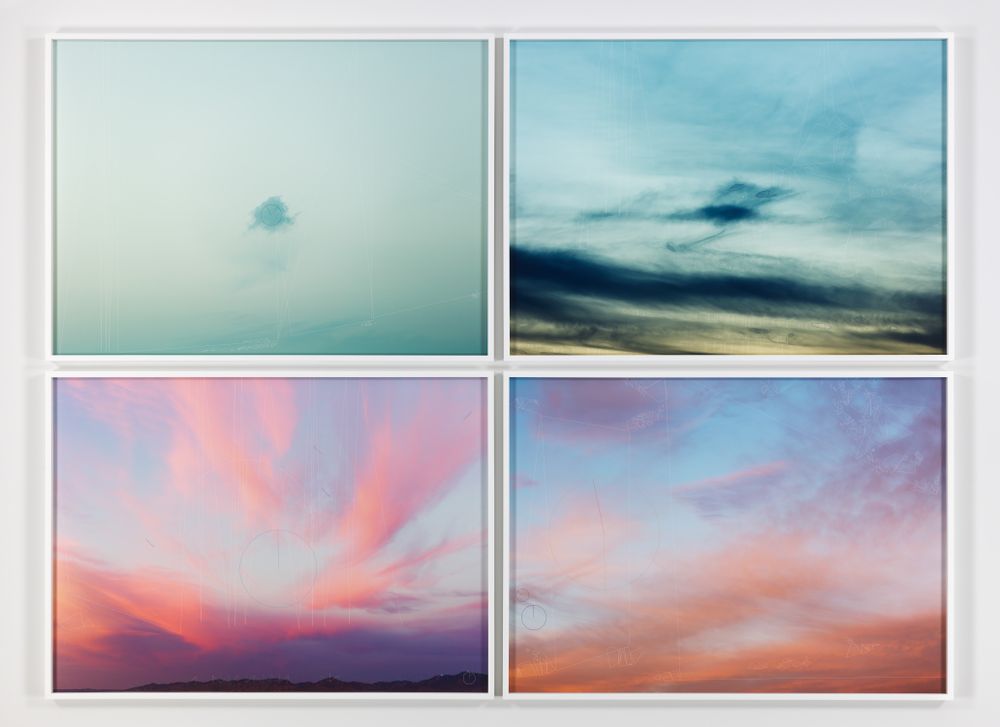

The exhibition, consisting largely of photographs (along with one video), covers a wide range of practical issues (facial analysis of a human figure, automated object recognition) as well as metaphorical ones: for example, an AI trained to look for “monsters that historically have been used as allegories for capitalism” (blood-sucking, industrialist vampires, slowly draining the life out of the working class). Depending on your political persuasion and aesthetic sensitivities, each of these individual pieces will be more or less interesting to you. I was particularly piqued by an AI that was trained to see, exclusively, objects associated with omens and portents throughout history: comets, eclipses, black cats and other harbingers of bad fortune. A machine designed to produce a self-fulfilling, negative view of the world: a pessimistic computer. But more than any individual image, this is an exhibition that amasses significance as you move through the space: it is Paglen’s punishing clarity and calm urgency which steadily accumulate, piece by piece, text by text, and force us to look where we cannot see.

That is, with the exception of the exhibition’s single most affecting work, titled “Behold These Glorious Times!” This video brings together hundreds of thousands of images and flashes them on the screen with dizzying, yet hypnotic, rapidity over the course of 12 minutes. What are we witnessing; what is this brainwashing? As we learn, these photographic images are taken from training libraries used to teach artificial intelligence networks: in sum, the images used to teach machines how to see. Further, as the video progresses, the images begin to break down into more and more basic forms: black and white grids, subtle arrays of shading. In order to recognize images, AI is taught to break apart each picture, analyze its most basic parts, and then make sense of the whole. This alternately numbing and captivating process is accompanied by an operatic soundtrack which, itself, is a product of algorithms: the sounds are pulled from an auditory training library that will teach machines how to recognize human speech. Thus, as we sit there, we are not having our brains “washed” but, rather, computerized. Two hundred years ago we saw what the world looked like to Niépce; this is what the world will look and sound like to its future beholders.

In the gallery, I watched the video from start to finish and began to watch a second time before dragging myself away; it is that entrancing. But what Paglen achieves is more than aesthetic impact: it is a destabilizing, existential message. First, we are witnessing the birth of an important new agent in our constantly interrelated visions of the world (the self, the Other, and now the Artificial Other?). This is a completely alien form of witness that we must learn to negotiate with, even as we continue to grapple with the meaning of human documentary vision. Second, this is not merely an academic or intellectual exercise: according to Paglen, the precepts dictating this machine vision must not remain invisible because of their grave, real-world implications. The rules and structure of fast-developing computer vision are currently up for debate, and now is the time to engage.

As Paglen explains, when artificial intelligence is being taught how to see, it is fed batches of thousands of images that are sorted into various groupings known as “training sets.” This is what humans look like (note: African-Americans have been mistaken for gorillas); this is what doctors and nurses look like (note: computers readily conclude that doctors are always male and nurses always female based on the data they’re given); this is what a woman wearing a burqa looks like (note: apparently humans can get this wrong as well, so who knows).
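For readers who want to see the mechanism in miniature, the doctor-and-nurse example can be sketched in a few lines of Python. This is a toy illustration only, not how any real vision system works: the labels, the 9-to-1 skew, and the `train` and `predict` helpers are all invented for this sketch, and the "model" does nothing more than count which label it saw most often.

```python
from collections import Counter, defaultdict

# Hypothetical, made-up "training set": (role, gender) labels attached to images.
# The 9-to-1 skew is an illustrative assumption, not real data.
TRAINING_SET = (
    [("doctor", "male")] * 9 + [("doctor", "female")] * 1
    + [("nurse", "female")] * 9 + [("nurse", "male")] * 1
)

def train(examples):
    """Count how often each gender label appears alongside each role."""
    counts = defaultdict(Counter)
    for role, gender in examples:
        counts[role][gender] += 1
    return counts

def predict(counts, role):
    """Return the gender seen most often with this role during training."""
    return counts[role].most_common(1)[0][0]

model = train(TRAINING_SET)
print(predict(model, "doctor"))  # the majority label for "doctor"
print(predict(model, "nurse"))   # the majority label for "nurse"
```

Even though one in ten doctors in this invented set is a woman, the counting model answers with the majority label every time. The skew in the data hardens into a rule, which is the worry the training-set examples above are pointing at.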

While there is already enormous bias in the images that we see around the world, we, as humans, have the capacity to critique our own views and try to expand our own “training sets” to challenge our visual stereotypes. While AI itself will not have the same capacity for self-reflection, the real question is whether its programmers and creators will make room for these debates. The basic challenges faced by AI vision will have wide-reaching consequences. For example, imagine the first time a self-driving car has to choose between two children who have run out into the road, one white and one black. If the computer “sees” the black child as a small animal, due to the bias of the training sets it has been given, its choice will be clear.

***

It should be underlined that photography is just one field among many that will be deeply shaken up in the years to come. Indeed, there is a great deal of anxiety across a range of human endeavors where AI is rapidly breaking ground. From the trivial or curious (winning at chess and Go, attempting cooking, churning out sports recaps) to the unsettling (writing poetry and novels, translating with increasing efficacy and grace, inventing new musical sounds, or producing screenplays complete with demographic-data-sourced, crowd-pleasing twists) and even the final frontier, love, it is clear that an artificially intelligent future has arrived in the present.

But even as it concerns the humble medium of photography, the changes will be no less drastic and, I think, wide-reaching. One history of the medium, as put into motion by pioneers such as Niépce and Daguerre, will carry on as long as humans are making and looking at pictures. But an important new realm of image-making and consuming is opening up, and Paglen’s work insists that we look deeply into the computers’ way of seeing and extract this vision from the field of the invisible while there is still room for debate. This is not an issue exclusively for photographers or image-making professionals to take on: given how much we will all depend on our machines’ views of tomorrow’s world, we would all do well to heed Paglen’s call and take a closer look today.

—Alexander Strecker

Editors’ note: Trevor Paglen’s exhibition “A Study of Invisible Images” was shown at Metro Pictures in New York City in the fall of 2017. You can contact the gallery to find out about Paglen’s next exhibition.

For those interested, Google has released an open-source tool that allows anyone to examine the datasets used to train AI. Harvard and MIT have partnered to study the ethics and governance of artificial intelligence. Get involved to help make sure that more efforts like these appear on the horizon.