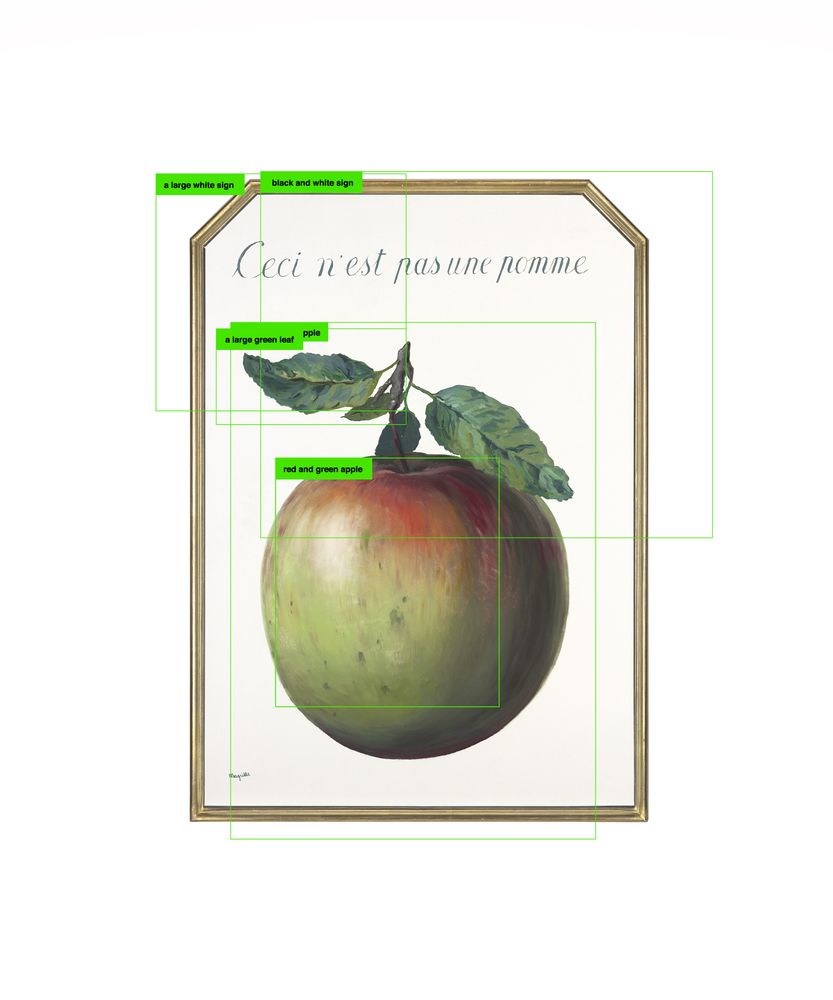

Trevor Paglen’s recent installation of some 30,000 color photographs in the Barbican’s Curve Gallery, From ‘Apple’ to ‘Anomaly’, took its cue from René Magritte’s 1964 painting Ceci n’est pas une pomme, which dismantled the assumed relation between word, image and thing. Magritte’s awareness of the arbitrary nature of the sign has currency in light of the ways in which Artificial Intelligence (AI) machines are now trained to ‘see’ the world.

We began with the relative innocence of descriptive labels and images—“apple”, “apple tree”, “fruit”— but soon the categories and images identified with them demonstrated the more insidious side of this process— “alcoholic”, “debtor”, “drug addict”, “homeless”, “racist”.

Set apart from the mass of photographs and displayed on its own wall, Paglen presented a framed reproduction of Magritte’s painting as it would be labelled for AI networks, with bounding box annotations identifying what is represented within the picture — “a large white sign”, “black and white sign”, “a large green leaf”, “red and green apple”. Here, the process of labelling in its dumb literality remains blind to the joke of the painting itself and a pointer to the inherent problems of AI vision that we were shown through the spectacle of photographs arrayed along the Barbican’s curved wall.

The photographs are taken from ImageNet, a database with currently more than 14 million images and over 21,000 categories and one of the main sources for training AI machine-learning technologies to facially recognize people and things. AI networks are taught to see the world as a result of being fed vast amounts of visual information sorted into various groupings known as “training sets”. The point of Paglen’s installation was to show us the mendacity at the heart of technological developments — the dominant value systems that get encoded within them. Nouns are not always neutral or innocent, images are treacherous, and how we identify images and things contains inherent prejudices and value judgments. We began with the relative innocence of descriptive labels and images—“apple”, “apple tree”, “fruit”— but soon the categories and images identified with them demonstrated the more insidious side of this process— “alcoholic”, “debtor”, “drug addict”, “homeless”, “racist”. The final category “anomaly” included people, many in disguise, masked and in costumes, their identity and classification uncertain.

Paglen’s earlier Limit Telephotography series, photographs of distant secret military bases, as well as his photographs of classified satellites and spacecraft in the earth’s orbit, often resulted in a distinctive formal aesthetic, abstract and diffused images, with their referents having to be signaled through a captioning text. In contrast, here we dwell with people’s social media images, the aesthetic of on-line vernacular photography, uncopyrighted populist images that ImageNet scraped from Google, Flickr, Facebook and Bing.

Paglen showed us images that have been unethically appropriated and used without the permission of those who took them. The work, then, also raises issues about the loss of privacy and control of images posted on line. These images are not hidden or secret, they are common, testimony to how much of the life-world we now photograph— crazy, dizzying clusters and swarms of images, an absurdist visual encyclopedia, ranging from pictures of the sun, sky, dust storms, spy satellites (a clear in-joke), to spam (tins of), false teeth, pizza and banana boats. We know these images, they are not unfamiliar, in many ways they are numbingly banal. The point is however we are not meant to see them like this.

What Paglen showed is a sampling of the images and labels that AI machines are fed in order to begin to visually make sense of the world. And the problem arises when it is pictures of people that are labelled and classified, when assumptions are made about how they look and their behavior. But while Paglen’s spectacular display alerted us to the problems in the process of developing AI training, it still remained silent about an important human dimension and paradox integral to ImageNet—AI machine vision is based on the intensive labor of piecemeal online workers looking at and tagging images. ImageNet’s current database required fifty thousand low-paid workers, all employed using Amazon’s crowdsourcing labor platform, Amazon Mechanical Turk.

— Mark Durden